在做项目的时候,需要新引入一个外部依赖,于是很自然地在项目的 pom.xml 文件中加入了依赖坐标,然后进行编译、打包、运行,没想到直接抛出了异常:

1 | 2019-01-13_17:18:52 [sparkDriverActorSystem-akka.actor.default-dispatcher-5] ERROR actor.ActorSystemImpl:66: Uncaught fatal error from thread [sparkDriverActorSystem-akka.remote.default-remote-dispatcher-7] shutting down ActorSystem [sparkDriverActorSystem] |

任务运行失败,仔细看日志觉得很莫名奇妙,是一个 java.lang.VerifyError 错误,以前从来没见过类似的。本文记录这个错误的解决过程。

问题出现

在上述错误抛出之后,可以看到 SparkContext 初始化失败,然后进程就终止了;

完整日志如下:

1 | 2019-01-13_17:18:52 [sparkDriverActorSystem-akka.actor.default-dispatcher-5] ERROR actor.ActorSystemImpl:66: Uncaught fatal error from thread [sparkDriverActorSystem-akka.remote.default-remote-dispatcher-7] shutting down ActorSystem [sparkDriverActorSystem] |

错误日志截图:

根据日志没有看出有关 Java 层面的什么问题,只能根据 JNI 字段描述符:

1 | class: org/jboss/netty/channel/socket/nio/NioWorkerPool |

猜测是某一个类的问题,根据:

1 | method: createWorker signature: (Ljava/util/concurrent/Executor;) Lorg/jboss/netty/channel/socket/nio/AbstractNioWorker;) Wrong return type in function |

猜测是某个方法的问题,方法的返回类型错误。

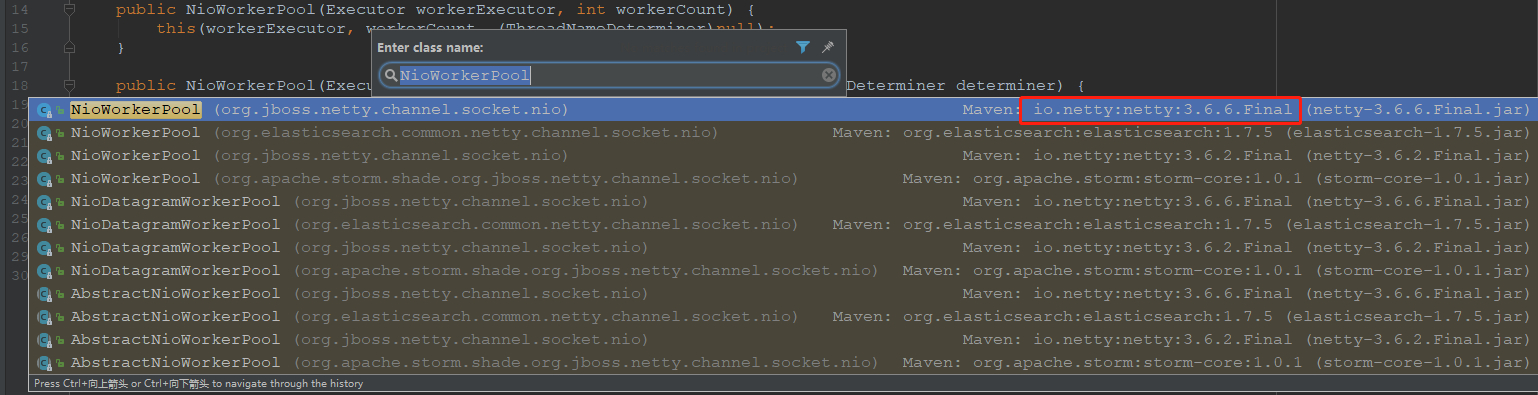

然后在项目中使用 ctrl+shift+t 快捷键(全局搜索 Java 类,每个人的开发工具设置的可能不一样)搜索类:NioWorkerPool,发现这个类的来源不是新引入的依赖包,而是原本就有的 netty 相关包,所以此时就可以断定这个莫名其妙的错误的原因就在于这个类的 createWorker 方法返回类型上面了。

搜索类 NioWorkerPool

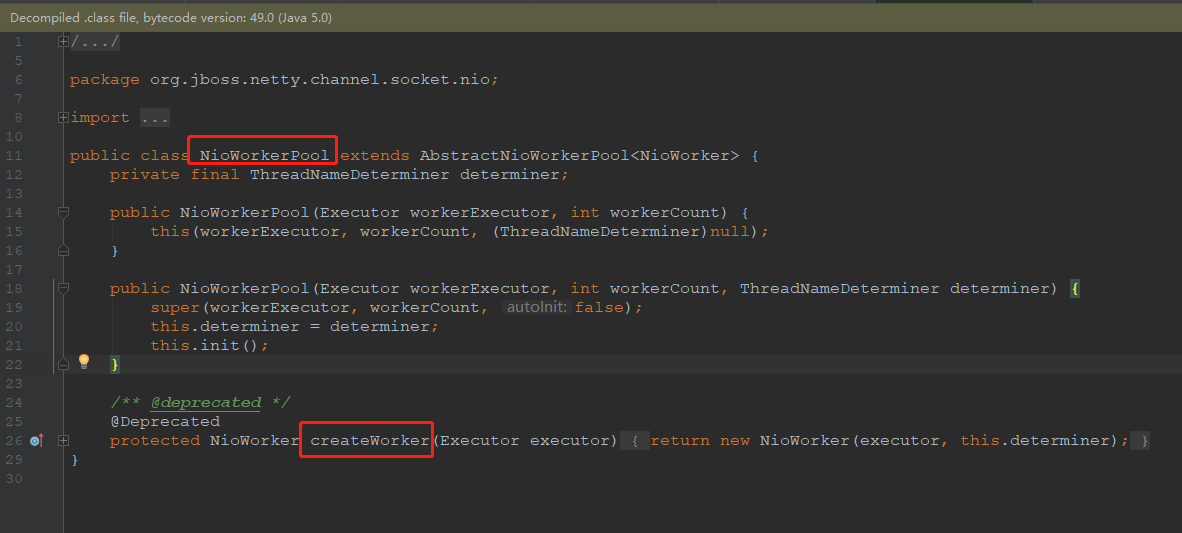

日志的 JNI 字段描述符显示返回类型是 AbstractNioWorker,但是这个一看就是抽象类,不是我们要找的,去类里面看源码,发现 createWorker 方法返回类型是 NioWorker:

类 NioWorkerPool 源码

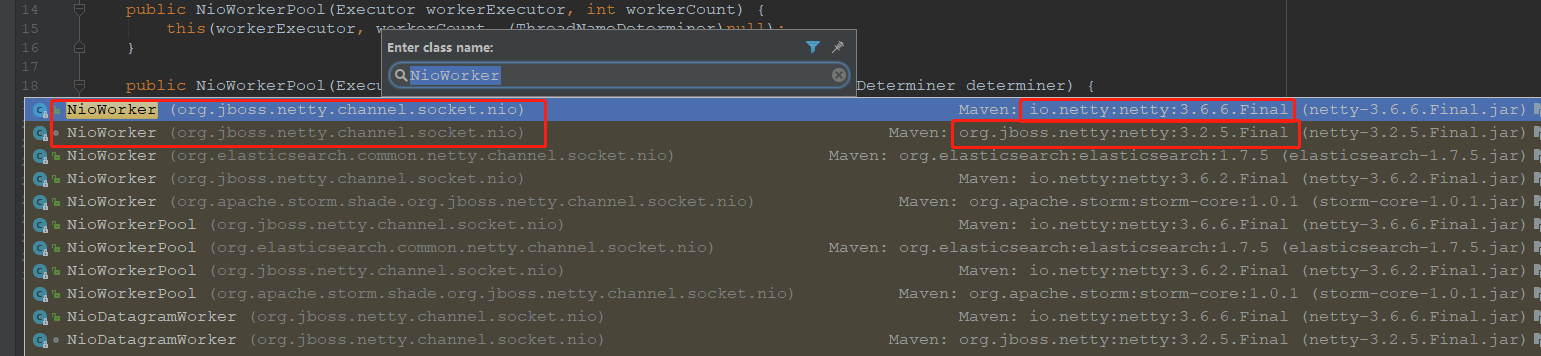

继续搜索类 NioWorker

好,此时发现问题了,这个类有 2 个,居然存在两个相同的包名,但是依赖坐标不一样,所以这个隐藏的原因在于类冲突,但是并不能算是依赖冲突引起的。也就是说,NioWorker 这个类重复了,但是依赖包坐标不一样,类的包路径却是一模一样的,不会引起版本冲突问题,而在实际运行任务的时候会抛出运行时异常,所以我觉得找问题的过程很艰辛。

使用依赖树查看依赖关系,是看不到版本冲突问题的,2 个依赖都存在:

io.netty 依赖

org.jboss.netty 依赖

于是又在网上搜索了一下,发现果然是 netty 的问题,也就是新引入的依赖包导致的,但是根本原因令人哭笑:netty 的组织结构变化,发布的依赖坐标名称变化,但是所有的类的包名称并没有变化,导致了这个错误。

问题解决

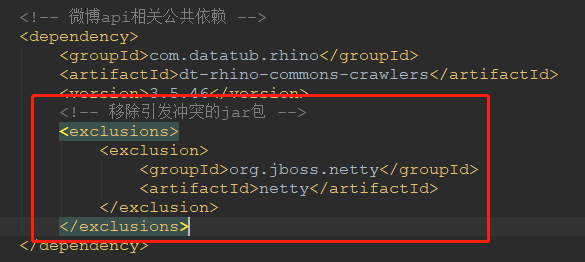

问题找到了,解决方法就简单了,移除传递依赖即可,同时也要注意以后再添加新的依赖一定要慎重,不然找问题的过程很是令人崩溃。

移除依赖

移除配置示例

1 | <!-- 移除引发冲突的 jar 包 --> |

问题总结

1、参考:https://stackoverflow.com/questions/33573587/apache-spark-wrong-akka-remote-netty-version ;

2、netty 的组织结构(影响发布的 jar 包坐标名称)变化了,但是所有的类的包名称仍然是一致的,很奇怪,导致我找问题也觉得莫名其妙,因为这不会引发版本冲突问题(但是本质上又是 2 个一模一样的类被同时使用,引发类冲突);

3、这个错误信息挺有意思的,解决过程也很好玩,边找边学习;

4、对于这种重名的类【类的包路径名、类名】,竟然对应的 jar 包不一样,这种极其特殊的情况也可以使用插件检测出来:

1 | <groupId>org.apache.maven.plugins</groupId> |

使用 enforcer:enforce 命令即可。

当然,这个插件还可以用来校验很多地方,例如代码中引用了 @Deprecated 的方法,也会给出提示信息,可以按照需求给插件配置需要校验的方面。